How to use NIPA GPU cluster

Before explaining the procedure, the following is the summary.

wesuggestsigning/slp_edu:latest

https://nipapublic.gabia.com

https://docs.kakaocloud.com/ha-gpu

Procedure

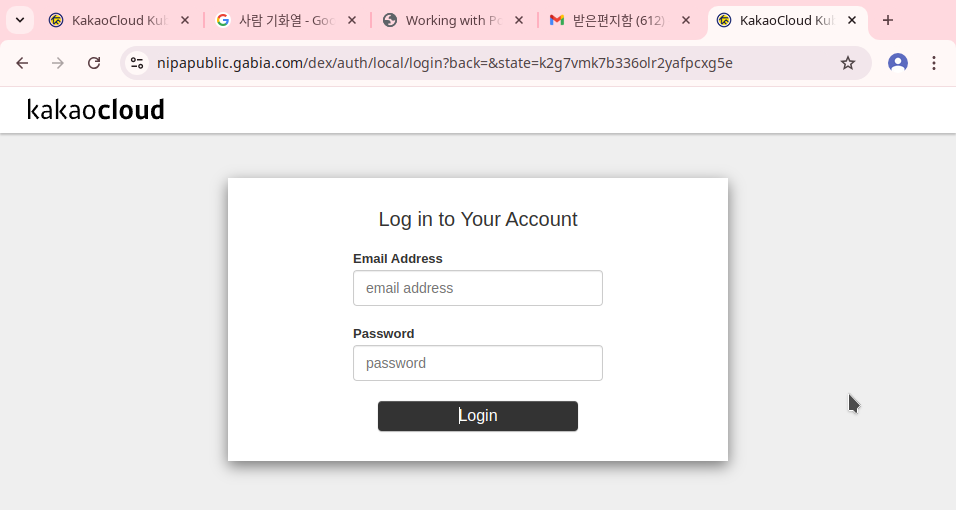

- Log on to the website.

https://nipapublic.gabia.com

Then the following screen will appear:

You need to use your ID and password given from NIPA.

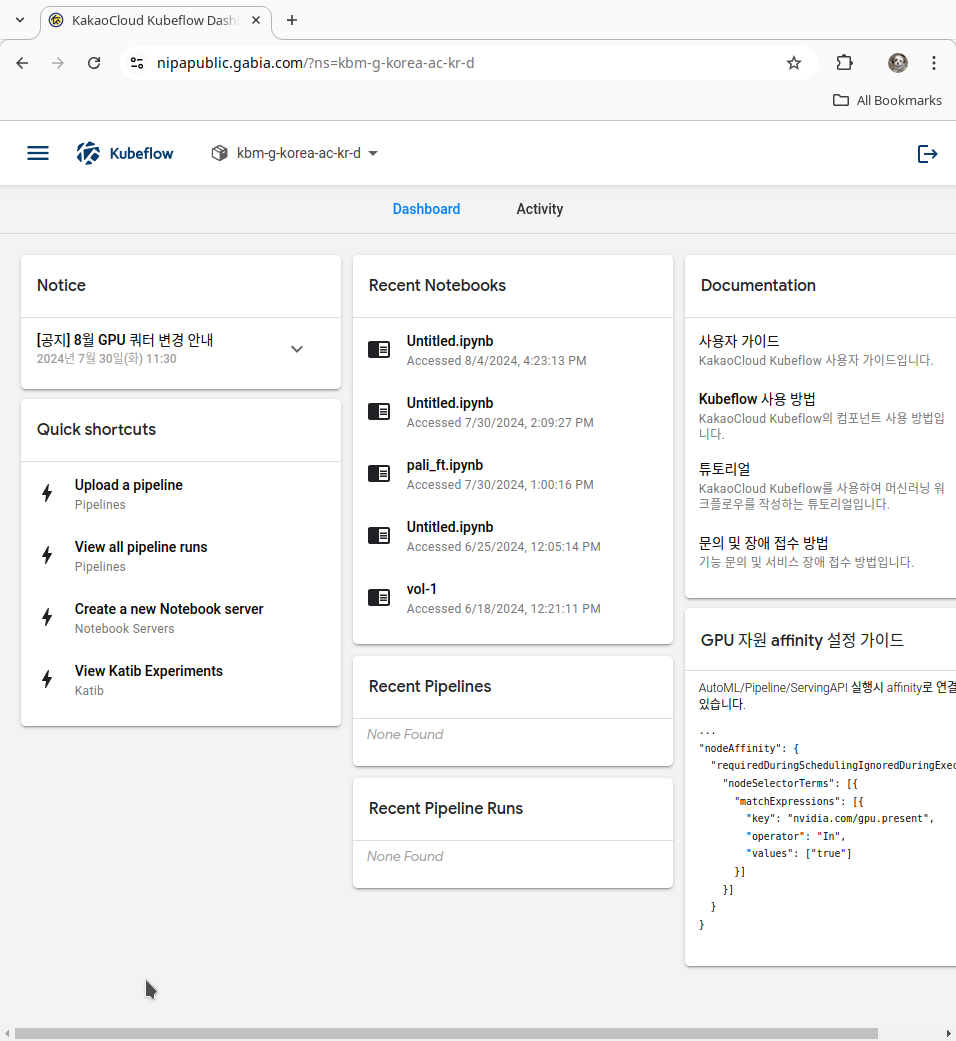

- Note that the following documents which may be found in the “Documentation” section may be useful.

- 사용자 가이드 (Can be directly accessed at:

https://docs.kakaocloud.com/ha-gpu) - Kubeflow 사용방법 (Can be directly accessed at:

https://docs.kakaocloud.com/ha-gpu) - 튜토리얼 (Can be directly accessed at:

https://docs.kakaocloud.com/ha-gpu)

- 사용자 가이드 (Can be directly accessed at:

- Creating the note book.

SentencePiece is a toolkit for sub-word tokenization. In this tutorial, we assume that you are using Ubuntu Linux. Link to the SentencePiece github page

conda config --add channels conda-forge

conda config --set channel_priority strict

conda install libsentencepiece sentencepiece sentencepiece-python sentencepiece-spm

or

pip install sentencepiece

STOP data set

In this tutorial, we will use the STOP dataset. Information about this dataset may be found at the following link: https://facebookresearch.github.io/spoken_task_oriented_parsing/ Stop dataset was developed for benchmarking the Spoken Language Understanding (SLU) task.

You may download it from the following link: Link to the download page

Algorithm

Running it

spm_train --input=train-all.trans_without_uttid.txt \

--model_prefix=model_unigram_256 \

--vocab_size=256 \

--character_coverage=1.0 \

--model_type=unigram

Doing tokenization and detokenization

Summary

In this page, we discuss how to define a model using Keras.